I am a Director in the Science team at Wayve AI in London, UK.

Previously, I was the Senior Director of Computer Vision at the Allen Institute for AI (AI2) in Seattle. I am also an Affiliate Associate Professor in the Computer Science & Engineering department at the University of Washington.

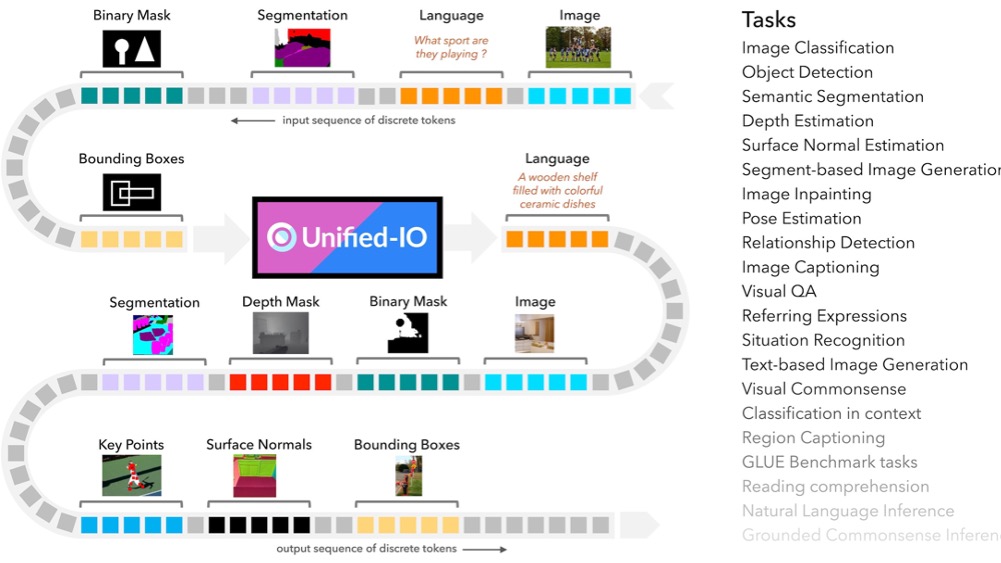

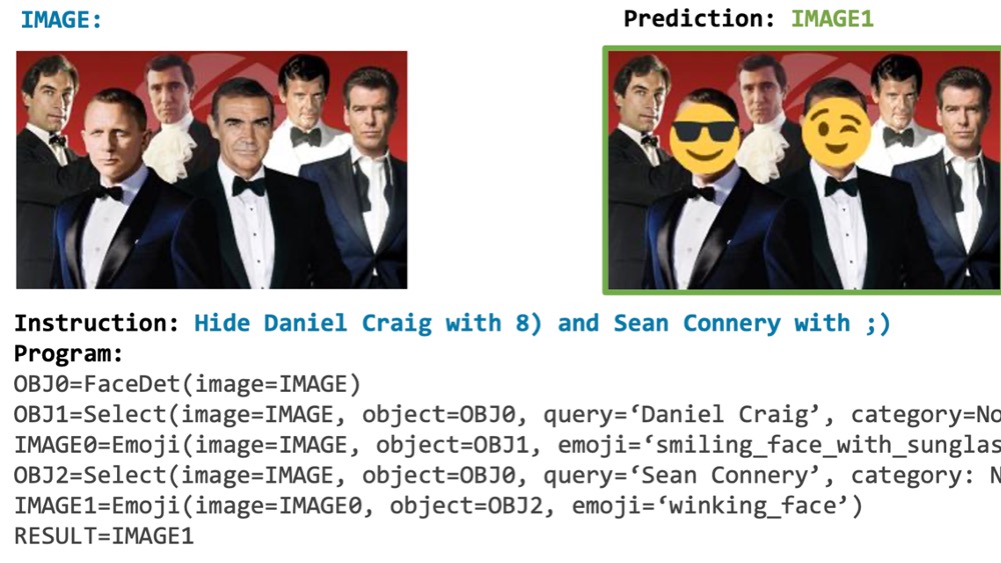

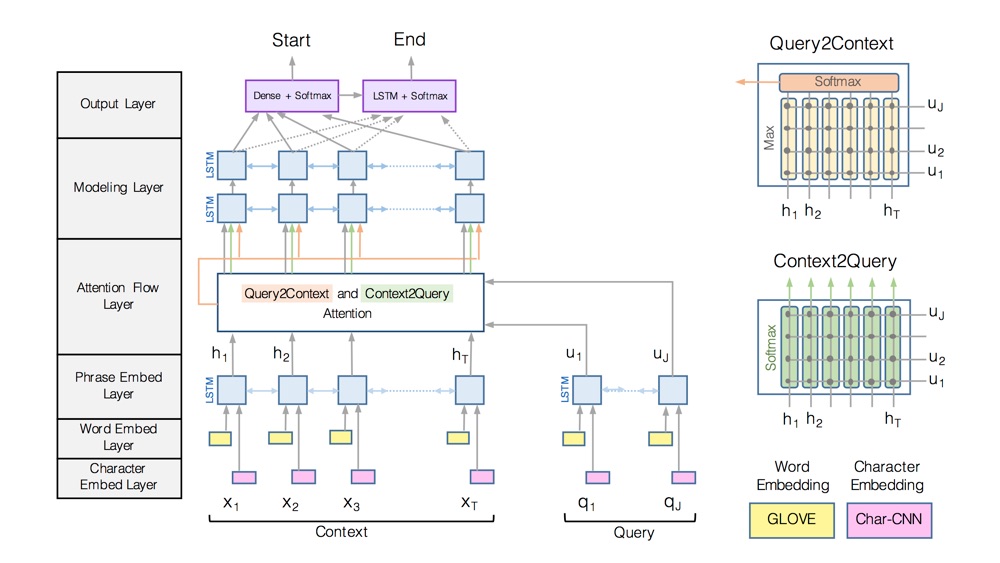

I enjoy building and deploying AI applications for users to interact with. My work over two decades spans computer vision, robotics and natural language processing.

I received my Ph.D. from the University of Maryland, College Park, under the supervision of Prof. Larry S. Davis, and also spent five years at Microsoft working on Image and Video Search.

Google Scholar